MHKD-MVQA: Multimodal Hierarchical Knowledge Distillation for Medical Visual Question Answering

Jianfeng Wang, Shuokang Huang, Huifang Du, Yu Qin, Haofen Wang, Wenqiang Zhang

2022 IEEE International Conference on Bioinformatics and Biomedicine (BIBM) (CCF B)

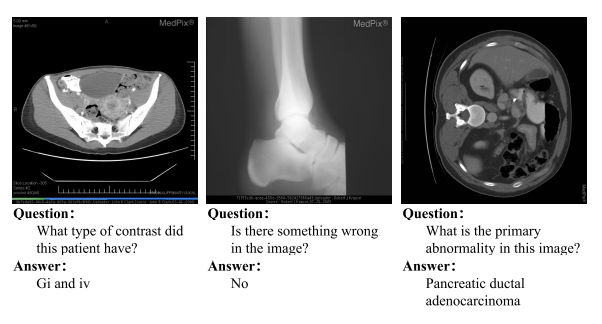

Medical Visual Question Answering (VQA) has emerged as a promising solution to enhance clinical decision-making and patient interactions. Given a medical image and a corresponding question, medical VQA aims to predict an informative answer by reasoning over the visual and textual information. However, datasets with limited samples constrain the generalization of medical VQA models, reducing their accuracy on unseen medical samples. Existing work has tried to mitigate this problem with meta-learning or self-supervised learning, but still fails to achieve satisfactory performance on medical VQA when samples are insufficient. To address this problem, we propose multimodal hierarchical knowledge distillation for medical VQA (MHKD-MVQA). The primary novelty of MHKD-MVQA is that we distill knowledge not only from the output but also from the intermediate layers, which exploits the knowledge contained in the limited samples more fully. Meanwhile, medical images and questions are embedded in a shared latent space, enabling our model to handle multimodal samples. We evaluate our model on two medical VQA datasets, VQA-MED 2019 and VQA-RAD, where MHKD-MVQA achieves state-of-the-art performance, outperforming baselines by 3.6% and 1.6%, respectively. Extensive experiments, including an analysis of class activation maps on medical images for specific questions, further highlight the generalization of knowledge distillation.
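The abstract does not specify the distillation losses. As a rough illustration of what distilling "from not only the output but also the intermediate layers" can mean, the sketch below combines a temperature-softened KL term on teacher and student answer logits with a mean-squared-error term on matched intermediate features. The loss forms, the mixing weight `alpha`, the `temperature`, the per-layer `layer_weights`, and all function names are hypothetical choices for illustration, not the MHKD-MVQA formulation; it also assumes each student feature has already been projected to the same dimensionality as its teacher counterpart.

```python
import math
from typing import Sequence

# Hypothetical helper names throughout; the loss forms are assumptions,
# not the formulation used by MHKD-MVQA.


def softmax(logits: Sequence[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: Sequence[float], q: Sequence[float], eps: float = 1e-12) -> float:
    """KL(p || q) for two discrete distributions over the same answer set."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def output_distillation_loss(student_logits: Sequence[float],
                             teacher_logits: Sequence[float],
                             temperature: float = 2.0) -> float:
    """Match the student's softened answer distribution to the teacher's."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # Common convention: scale by T^2 so the term's gradient magnitude stays
    # roughly comparable when the temperature changes.
    return temperature ** 2 * kl_divergence(p_teacher, p_student)


def feature_distillation_loss(student_feat: Sequence[float],
                              teacher_feat: Sequence[float]) -> float:
    """Mean squared error between one matched pair of intermediate features."""
    if len(student_feat) != len(teacher_feat):
        raise ValueError("matched features must have the same dimensionality")
    return sum((s - t) ** 2 for s, t in zip(student_feat, teacher_feat)) / len(student_feat)


def hierarchical_distillation_loss(student_feats: Sequence[Sequence[float]],
                                   teacher_feats: Sequence[Sequence[float]],
                                   student_logits: Sequence[float],
                                   teacher_logits: Sequence[float],
                                   layer_weights: Sequence[float],
                                   alpha: float = 0.5,
                                   temperature: float = 2.0) -> float:
    """Weighted sum of an output-level term and per-layer feature terms."""
    if not (len(student_feats) == len(teacher_feats) == len(layer_weights)):
        raise ValueError("each distilled layer needs a student feature, "
                         "a teacher feature and a weight")
    # Intermediate-layer knowledge: one weighted MSE term per matched layer.
    feature_term = sum(w * feature_distillation_loss(s, t)
                       for w, s, t in zip(layer_weights, student_feats, teacher_feats))
    # Output-layer knowledge: softened answer-distribution matching.
    output_term = output_distillation_loss(student_logits, teacher_logits, temperature)
    return alpha * output_term + (1.0 - alpha) * feature_term
```

For example, `hierarchical_distillation_loss([[1.0, 2.0]], [[0.0, 0.0]], [0.3, 1.2], [0.3, 1.2], [1.0])` evaluates to 1.25: the logits agree, so the output term is zero, and the single feature pair contributes an MSE of 2.5 weighted by `1 - alpha = 0.5`. In a real training loop these terms would typically be computed on batched tensors and added to the ordinary answer-classification loss.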